Our project brings the latest AI and machine learning technologies together to assist visually impaired individuals. With a combination of hardware and software, our device offers advanced features for safer navigation, enhanced communication, and improved accessibility.

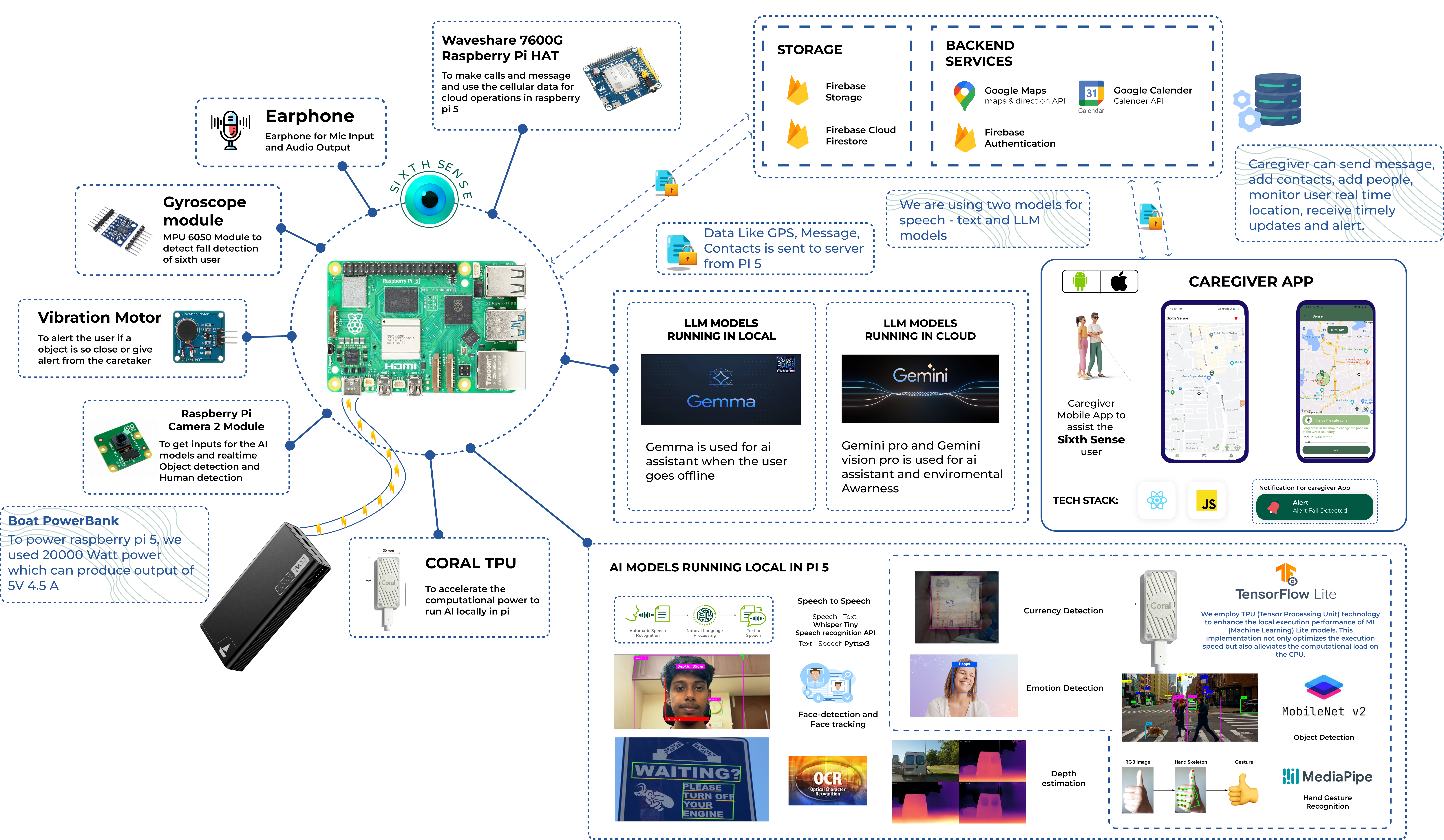

The device uses a Coral TPU, Raspberry Pi, GPS, and a camera to detect objects, recognize faces, and estimate distances. Speech-to-text and text-to-speech capabilities allow for easy interaction, while gesture-based controls provide a seamless user experience. Whether it's navigating the streets, identifying people, or managing daily tasks, our project is designed to empower users with greater independence.
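The page does not say how distances are estimated. One common approach, shown here as a sketch under that assumption, is the pinhole-camera relation: an object of known real width W that appears w pixels wide in an image with focal length f (in pixels) is roughly d = W * f / w away. The bounding-box width would come from the object detector running on the Coral TPU. The reference widths and calibration numbers below are hypothetical placeholders.

```python
# Sketch only: monocular distance estimation with the pinhole-camera model.
# The calibration values and reference widths are hypothetical placeholders.

# Hypothetical average real-world widths (metres) keyed by detector class label.
REFERENCE_WIDTHS_M = {"person": 0.45, "car": 1.8, "chair": 0.5}


def calibrate_focal_px(known_distance_m: float, known_width_m: float,
                       measured_width_px: float) -> float:
    """Focal length in pixels from one image of an object at a known distance."""
    return measured_width_px * known_distance_m / known_width_m


def estimate_distance_m(label: str, box_width_px: float, focal_px: float) -> float:
    """Distance = real width * focal length / apparent width (similar triangles)."""
    if box_width_px <= 0:
        raise ValueError("bounding-box width must be positive")
    real_width_m = REFERENCE_WIDTHS_M[label]  # KeyError for classes without a reference width
    return real_width_m * focal_px / box_width_px


if __name__ == "__main__":
    # Calibration: a 0.45 m wide target, 2 m away, spans 180 px in the frame.
    focal = calibrate_focal_px(2.0, 0.45, 180)
    # A detected person whose bounding box is 120 px wide.
    print(f"person at about {estimate_distance_m('person', 120, focal):.2f} m")
```

Calibrating once per camera is enough, because the focal length in pixels depends only on the lens and sensor resolution. The estimate degrades for objects seen at an angle or partly outside the frame.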

Discover a new world of accessibility through our AI-driven technology. This project seamlessly integrates machine learning to empower visually impaired individuals, offering innovative solutions for enhanced navigation, safety, and communication. Experience a transformative approach to everyday tasks with features designed to improve independence and efficiency.

Our project integrates two models, Gemini and Gemma, to answer questions for visually impaired users. When an internet connection is available, questions are sent to Gemini. When there is no connection, Gemma, an open model that can run without network access, takes over so that assistance continues without interruption.
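A minimal sketch of this online/offline switch, assuming a simple TCP probe to a public DNS server as the connectivity check. `ask_online` and `ask_offline` are hypothetical callables standing in for a Gemini API client and a locally running Gemma model; neither the probe host nor these names come from the project.

```python
import socket
from typing import Callable


def is_online(host: str = "8.8.8.8", port: int = 53, timeout: float = 2.0) -> bool:
    """Cheap connectivity probe: try to open a TCP connection to a public DNS server."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def answer(question: str,
           ask_online: Callable[[str], str],
           ask_offline: Callable[[str], str],
           online_check: Callable[[], bool] = is_online) -> str:
    """Use the cloud model (hypothetically Gemini) when online, else the local one (Gemma)."""
    if online_check():
        try:
            return ask_online(question)
        except Exception:
            # Connectivity may drop mid-request; fall through to the offline model.
            pass
    return ask_offline(question)
```

Passing the probe as `online_check` keeps the routing logic testable without a network, and falling back on an exception as well as on a failed probe covers a connection that drops in the middle of a request.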

In this system, we combine emotion detection with facial recognition to help visually impaired individuals perceive the emotions of the people around them. When a person stands in front of the user, the system first tries to recognize their face from the camera feed. Only if the face belongs to a known person does it go on to analyze that person's facial expression.
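The gating described above can be sketched as follows, assuming faces are compared as embedding vectors using cosine similarity against a threshold of 0.8. The embeddings, the threshold, and the `classify_emotion` callable are hypothetical placeholders for whatever face-recognition and emotion models the system actually uses.

```python
import math
from typing import Callable, Dict, Optional, Sequence

Embedding = Sequence[float]


def cosine_similarity(a: Embedding, b: Embedding) -> float:
    """Cosine of the angle between two embedding vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def identify(face: Embedding, known: Dict[str, Embedding],
             threshold: float = 0.8) -> Optional[str]:
    """Return the best-matching known name, or None if no match reaches the threshold."""
    best_name: Optional[str] = None
    best_score = threshold
    for name, reference in known.items():
        score = cosine_similarity(face, reference)
        if score >= best_score:
            best_name, best_score = name, score
    return best_name


def describe_person(face: Embedding, face_crop: object,
                    known: Dict[str, Embedding],
                    classify_emotion: Callable[[object], str]) -> Optional[str]:
    """Run emotion detection only after the face has been matched to a known person."""
    name = identify(face, known)
    if name is None:
        return None  # unfamiliar face: the emotion model is never invoked
    return f"{name} looks {classify_emotion(face_crop)}"
```

Running the emotion model only after a match saves computation on the Raspberry Pi and avoids announcing the expressions of strangers.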

We have built a speech-to-text system tailored for individuals with visual impairments. Our system seamlessly integrates with Google's powerful speech recognition technology, allowing users to convert spoken words into text format.

We have integrated the Google Calendar API into Sixth Sense so that visually impaired users can manage their schedules and tasks through voice commands. Users can create events on specific days and ask about their schedule for any given day, which gives them instant access to this information and helps them stay organized and manage their time.
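As a sketch of the voice-to-calendar step, the code below turns a simple transcribed command such as "add dentist appointment on Friday" into an all-day event body in the shape accepted by the Google Calendar API v3 `events.insert` method. It also computes the `timeMin`/`timeMax` bounds needed to ask about one day's schedule. The command grammar is a hypothetical simplification; real transcripts would need more robust parsing.

```python
import datetime as dt
import re
from typing import Dict, Optional, Tuple

WEEKDAYS = ["monday", "tuesday", "wednesday", "thursday",
            "friday", "saturday", "sunday"]

# Hypothetical command grammar: "add|create <summary> on <weekday>".
ADD_PATTERN = re.compile(r"^\s*(?:add|create)\s+(.+?)\s+on\s+(\w+)\s*$", re.IGNORECASE)


def next_weekday(today: dt.date, weekday_name: str) -> dt.date:
    """Next date falling on the named weekday; today counts if it already matches."""
    target = WEEKDAYS.index(weekday_name.lower())
    return today + dt.timedelta(days=(target - today.weekday()) % 7)


def parse_add_command(text: str, today: dt.date) -> Optional[Dict[str, object]]:
    """Turn a transcribed command into an all-day Calendar API event body, or None."""
    match = ADD_PATTERN.match(text)
    if match is None or match.group(2).lower() not in WEEKDAYS:
        return None
    day = next_weekday(today, match.group(2))
    return {
        "summary": match.group(1),
        "start": {"date": day.isoformat()},
        # All-day events use an exclusive end date, i.e. the following day.
        "end": {"date": (day + dt.timedelta(days=1)).isoformat()},
    }


def day_bounds(day: dt.date, tz: dt.tzinfo = dt.timezone.utc) -> Tuple[str, str]:
    """RFC 3339 timeMin/timeMax strings spanning one calendar day in the given zone."""
    # Pass the user's local time zone as tz; UTC is only a default for the sketch.
    start = dt.datetime.combine(day, dt.time.min, tzinfo=tz)
    end = start + dt.timedelta(days=1)
    return start.isoformat(), end.isoformat()
```

With an authorized client from google-api-python-client, the body could be sent with `service.events().insert(calendarId="primary", body=body).execute()`. A day's schedule could be fetched with `service.events().list(calendarId="primary", timeMin=start, timeMax=end, singleEvents=True, orderBy="startTime").execute()`.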

Our innovative approach leverages Google services to create a seamless experience for visually impaired users. By integrating Google Calendar for event management, Google Maps for navigation, and Google Assistant for voice interaction, we provide a dynamic and personalized user experience. This combination ensures users can access essential services with ease and confidence, all powered by Google's robust technology suite.

The Raspberry Pi 5 uses several communication interfaces to connect its sensors and modules. The Coral TPU and earphones connect over USB, while the SIM7600 module uses both UART and USB for network connectivity. The gyroscope communicates over I2C for motion sensing, and the camera connects through the board's MIPI CSI-2 camera connector, which carries high-bandwidth image data. Finally, the vibration module is driven from GPIO pins to provide tactile feedback. Together, these interfaces let the Raspberry Pi 5 exchange data with every component of the device.
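SIM7600 modules typically include a GNSS receiver. Assuming the device's GPS fix is read from it over UART with the `AT+CGPSINFO` command (an assumption, since the page does not say which GPS source is used), the response reports latitude as ddmm.mmmmmm and longitude as dddmm.mmmmmm. Both must be converted to decimal degrees before they can be used for maps or geofencing. A minimal parser for one response line:

```python
from typing import Optional, Tuple


def _dm_to_decimal(value: str, hemisphere: str) -> float:
    """Convert (d)ddmm.mmmm degrees-and-minutes to signed decimal degrees."""
    raw = float(value)
    degrees = int(raw // 100)
    minutes = raw - degrees * 100
    decimal = degrees + minutes / 60.0
    return -decimal if hemisphere in ("S", "W") else decimal


def parse_cgpsinfo(line: str) -> Optional[Tuple[float, float]]:
    """Parse '+CGPSINFO: lat,N/S,lon,E/W,...' into (lat, lon); None if there is no fix."""
    prefix = "+CGPSINFO:"
    line = line.strip()
    if not line.startswith(prefix):
        return None
    fields = [f.strip() for f in line[len(prefix):].split(",")]
    if len(fields) < 4 or not fields[0] or not fields[2]:
        return None  # the modem reports empty fields until it has a fix
    return _dm_to_decimal(fields[0], fields[1]), _dm_to_decimal(fields[2], fields[3])
```

Keeping the parser separate from the serial I/O means it can be tested on recorded modem output without the hardware attached.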

We have also developed a caregiver application that provides real-time messaging with the visually impaired user. The app tracks the user's location in real time and sends a geofencing alert through the application when the user moves beyond a specified range. Messaging is bidirectional, and calls can be placed in either direction. To make it accessible to more caregivers, the application also offers multilingual support.
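A minimal geofencing sketch, assuming a circular fence around a caregiver-chosen centre point and great-circle (haversine) distances. The class reports only boundary crossings, so an alert is raised once when the user leaves the fence rather than on every location update. The class, its method names, and the starting-inside assumption are hypothetical, not taken from the app.

```python
import math
from typing import Optional

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in metres


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in metres between two lat/lon points (spherical Earth)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = math.radians(lat2 - lat1)
    d_lambda = math.radians(lon2 - lon1)
    a = (math.sin(d_phi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2)
    # Clamp to guard against rounding pushing a slightly above 1.
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(min(1.0, a)))


class Geofence:
    """Circular fence that reports only boundary crossings, not every GPS update."""

    def __init__(self, center_lat: float, center_lon: float, radius_m: float) -> None:
        self.center_lat = center_lat
        self.center_lon = center_lon
        self.radius_m = radius_m
        self.inside = True  # assumption: tracking starts with the user inside the fence

    def update(self, lat: float, lon: float) -> Optional[str]:
        """Return "exited" or "entered" on a crossing, otherwise None."""
        inside = haversine_m(self.center_lat, self.center_lon, lat, lon) <= self.radius_m
        event: Optional[str] = None
        if self.inside and not inside:
            event = "exited"
        elif not self.inside and inside:
            event = "entered"
        self.inside = inside
        return event
```

In this sketch, each location update from the device would be passed to `update()`, and an "exited" result would trigger the caregiver alert.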

Meet the talented team behind our innovative project designed to assist visually impaired individuals. Our experts are committed to developing advanced AI-based solutions that enhance navigation, communication, and safety for those with visual impairments.

Our team combines software and hardware expertise to create a device that seamlessly integrates machine learning, object detection, and voice recognition. We work tirelessly to ensure our product is intuitive and accessible, empowering visually impaired users to interact with the world with confidence and independence.